Introduction to Kafka Security

Dan Weston

Senior Curriculum Developer

Security is a primary consideration for any system design, and Apache Kafka® is no exception. Out of the box, Kafka has relatively little security enabled, so you need a basic familiarity with authentication, authorization, encryption, and audit logs in Kafka in order to securely put your system into production. This course has been created to quickly get you up to speed on what you need to know.

To secure your Apache Kafka-based system, you must formulate your overall strategy according to several factors: your internal corporate policy, the industry or regulatory requirements that govern your data processing capabilities, and finally the environment in which you plan to deploy your solution.

Of course, adding security often brings performance costs. For example, the CPU overhead of encrypting data can be significant in a high-throughput system like Kafka, up to 30% in some cases, even with the optimizations that Kafka uses to reduce this cost. On the other hand, you can't focus purely on performance, or you will probably wind up with a poorly protected system.

Securing Data Streams in Kafka

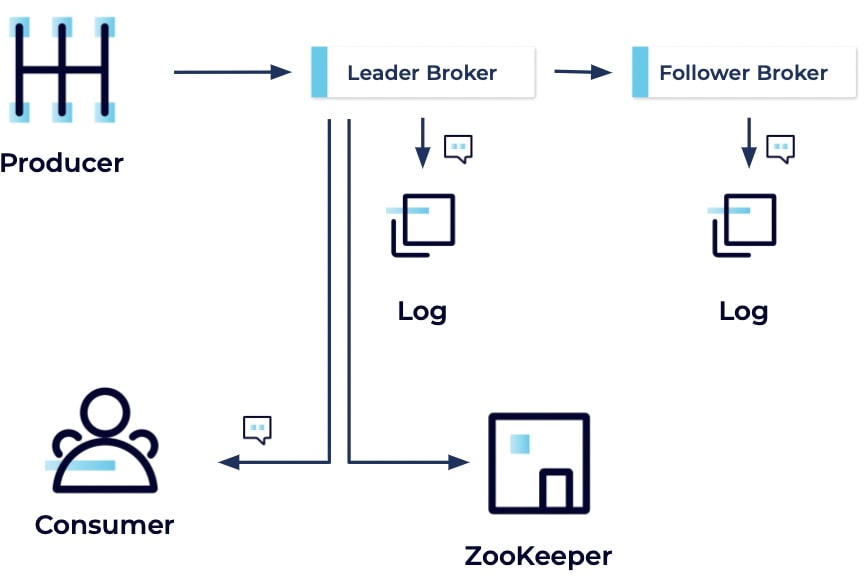

A good way to conceptualize the parts that need securing is to consider the way that data (i.e., a message) flows through your Kafka system:

The producer begins the message's journey by creating it and sending it to the cluster. The message is received by the leader broker, which writes the message to its local log file. Then a follower broker fetches the message from the leader and similarly writes it to its own local log file. The leader broker updates the partition state in ZooKeeper, and finally a consumer receives the message from the broker.

How Kafka Security Works

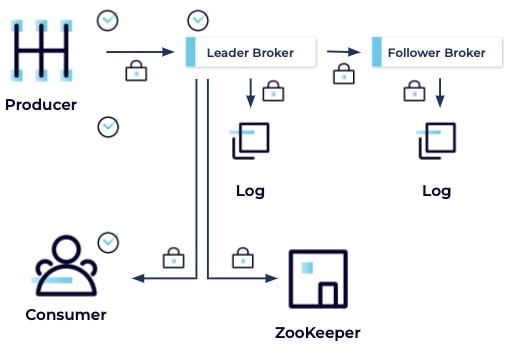

Each step of this data journey requires that a decision be made. For example, the broker authenticates the client to make sure the message is actually originating from the configured producer. Likewise, the producer verifies that it has a secure connection to the broker before sending any messages. Then before the leader broker writes to its log, it makes sure that the producer is authorized to write to the desired topic. This check also applies to the consumer – it must be authorized to read from the topic.
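As a rough illustration of the client side of these decisions, the sketch below assembles the kind of properties a producer or consumer might use to authenticate with SASL and to verify the broker over TLS. The property keys are standard Kafka client settings; the helper names, the choice of SCRAM-SHA-512, and every host, path, and credential are assumptions made for this example, not values taken from the course.

```python
def build_client_security_config(bootstrap_servers, username, password,
                                 truststore_path, truststore_password):
    """Return Kafka client properties for a SASL_SSL listener (illustrative helper)."""
    # JAAS entry for SCRAM; the mechanism is an assumption for this sketch.
    jaas = (
        "org.apache.kafka.common.security.scram.ScramLoginModule required "
        f'username="{username}" password="{password}";'
    )
    return {
        "bootstrap.servers": bootstrap_servers,
        # SASL authenticates the client; TLS (SSL) encrypts the connection.
        "security.protocol": "SASL_SSL",
        "sasl.mechanism": "SCRAM-SHA-512",
        "sasl.jaas.config": jaas,
        # The truststore holds the CA certificate used to verify the broker.
        "ssl.truststore.location": truststore_path,
        "ssl.truststore.password": truststore_password,
        # Check that the broker certificate matches the hostname we dialed.
        "ssl.endpoint.identification.algorithm": "https",
    }


def to_properties(config):
    """Render the dict in Java .properties format, one key=value per line."""
    return "\n".join(f"{key}={value}" for key, value in config.items()) + "\n"


if __name__ == "__main__":
    # Placeholder values only; substitute your own listener and credentials.
    example = build_client_security_config(
        "broker1.example.com:9093", "producer-app", "change-me",
        "/path/to/client.truststore.jks", "change-me-too",
    )
    print(to_properties(example))
```

In practice, passwords would come from a secret store rather than being written into a file in plain text; the sketch only shows which settings correspond to "authenticate the client" and "verify a secure connection to the broker."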

Data Security and Encryption

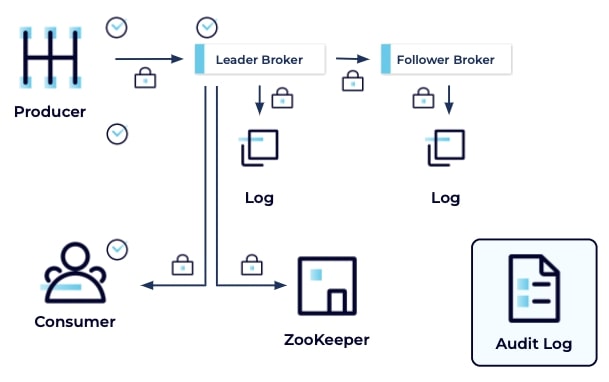

Throughout the system, data should be encrypted so that it can't be read in transit or at rest. Additionally, all operations should be recorded in an audit log so that there is an audit trail in case something happens, or the behavior of the cluster needs to be verified.
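To make the link between authorization and auditing concrete, here is a toy, in-memory model of a broker-side check that denies by default and records every decision. It is not Kafka's authorizer API: the `Acl` and `ToyAuthorizer` names, the record fields, and the principals are all hypothetical and serve only to illustrate the idea of an audit trail.

```python
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Acl:
    """One allow rule (hypothetical shape, not Kafka's ACL format)."""
    principal: str  # e.g. "User:producer-app"
    operation: str  # "READ" or "WRITE"
    topic: str


class ToyAuthorizer:
    """Deny-by-default check that appends every decision to an audit log."""

    def __init__(self, acls):
        self._acls = set(acls)
        self.audit_log = []  # one JSON string per decision

    def authorize(self, principal, operation, topic):
        # Access is granted only if an exactly matching allow rule exists.
        granted = Acl(principal, operation, topic) in self._acls
        record = {
            "time": datetime.now(timezone.utc).isoformat(),
            "principal": principal,
            "operation": operation,
            "topic": topic,
            "granted": granted,
        }
        # Denials are logged as well; they are often the interesting entries.
        self.audit_log.append(json.dumps(record))
        return granted


if __name__ == "__main__":
    authorizer = ToyAuthorizer([
        Acl("User:producer-app", "WRITE", "orders"),
        Acl("User:consumer-app", "READ", "orders"),
    ])
    authorizer.authorize("User:producer-app", "WRITE", "orders")
    authorizer.authorize("User:consumer-app", "WRITE", "orders")
    for line in authorizer.audit_log:
        print(line)
```

Logging both grants and denials is a deliberate choice in this sketch: a trail that contains only successful operations cannot show that someone tried to write to a topic they had no access to.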

In conclusion, you need to choose the security measures for your system according to the corporate, industry, and environmental requirements particular to your scenario. The informational modules presented in this course detail the tools available to you with respect to Kafka and adjacent technologies, and the exercises teach you how to go about implementing them.


Introduction to Kafka Security

Security is a primary consideration for any system design, and your use of Apache Kafka is no exception. When deciding how to secure your Kafka-based system, the degree of security you choose to apply can vary based on several factors: your internal corporate policy, any industry or regulatory requirements that govern your data processing capabilities, and the environment in which you're deploying your solution. A development environment that doesn't contain sensitive data will often not need to be as secure as a production environment, for example.

You can, of course, dial the security up to 10 or 11 across all deployments and be done with it, but adding security to a system comes with a performance cost. Notably, the CPU overhead of encrypting data can be significant when using a high-throughput system like Apache Kafka, up to 30% in some cases. While Kafka makes many internal optimizations to reduce this cost, it is nonetheless noticeable. On the other hand, focus too much on performance and you run the risk of leaving some important part of the system poorly protected.

Before we get into the details, let's look at how data flows through Kafka and the places where we need to consider the security aspects of your system. We receive a message from our producers. That message is sent to the leader broker, which writes the message to its local log file. The follower broker fetches the message from the leader and also writes the message to its log file. The leader broker updates the partition state in ZooKeeper, keeping the in-sync replicas updated. Last, we have a consumer that receives the message from the broker.

With each step, there's a security decision that needs to be made. First, the broker authenticates the client to ensure the message is really coming from the configured producer. Our producer should then verify it has a secure connection to the configured broker before sending any messages. All of our data should be encrypted so it can't be read in transit. In addition, all of our data stores should be encrypted as well, to protect our data at rest. Before we commit the message to the log, the leader broker should verify that the producer has the correct access permission to write to the topic. The same goes for our consumer: does it have the access it needs to read from the topic? We should also be recording these operations to an audit log, giving us an audit trail in case something unexpected happens or we need to verify that our cluster is behaving as it should.

The mix of corporate, industry, and environment requirements particular to your scenario determines what security measures you ought to apply to your system. This course covers the tools Kafka provides to help you meet your specific security needs, and the labs show you how to apply these tools and implement the security appropriate to your workload. By the end of this course, you'll have gained an understanding of which tools to use across the range of scenarios.
