Testing Apache Kafka®

Learn about the ecosystem of tools for testing your Apache Kafka® applications

Apache Kafka® has extensive tooling that helps developers write good tests and build continuous integration pipelines:

- Ecosystem of client languages: You can develop applications in your preferred programming language with your own IDEs and test frameworks: Java, Go, Python, .NET, REST, SQL, Node.js, C/C++, and more.

- Easy to unit test: Kafka’s native log-based processing and libraries for simulated Kafka brokers and mock clients make input/output testing using real datasets simple.

- Tools to test more complicated applications: Tools like the TopologyTestDriver make it easy to test complex Kafka Streams applications without having to run Kafka brokers.

- Integrations with common testing systems: Testcontainers, Docker, and Confluent Cloud.

- Access to the source: Apache Kafka is all open source, so you have hundreds of thousands of lines of code to learn from, including great tests.

- Test with real production datasets: Use Kafka’s native API to copy data from prod to test, or mock datasets with the Datagen Connector, and define any serialization format you need: Avro, JSON, Protobuf.
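As a minimal sketch of the TopologyTestDriver approach mentioned in the list above, the following Java example pipes one record through a tiny Kafka Streams topology without running a broker. The topology itself, the topic names (`input-topic`, `output-topic`), and the class name are hypothetical and chosen only for illustration. The example assumes the `kafka-streams` and `kafka-streams-test-utils` libraries are on the classpath.

```java
import java.util.Locale;
import java.util.Properties;

import org.apache.kafka.common.serialization.Serdes;
import org.apache.kafka.common.serialization.StringDeserializer;
import org.apache.kafka.common.serialization.StringSerializer;
import org.apache.kafka.streams.StreamsBuilder;
import org.apache.kafka.streams.StreamsConfig;
import org.apache.kafka.streams.TestInputTopic;
import org.apache.kafka.streams.TestOutputTopic;
import org.apache.kafka.streams.Topology;
import org.apache.kafka.streams.TopologyTestDriver;
import org.apache.kafka.streams.kstream.Consumed;
import org.apache.kafka.streams.kstream.Produced;

class UppercaseTopologySketch {

    // Hypothetical topology: read strings, upper-case the value, write them out.
    static Topology buildTopology() {
        StreamsBuilder builder = new StreamsBuilder();
        builder.stream("input-topic", Consumed.with(Serdes.String(), Serdes.String()))
               .mapValues(value -> value.toUpperCase(Locale.ROOT))
               .to("output-topic", Produced.with(Serdes.String(), Serdes.String()));
        return builder.build();
    }

    // Runs a single value through the topology in-process and returns the output value.
    static String process(String value) {
        Properties props = new Properties();
        props.put(StreamsConfig.APPLICATION_ID_CONFIG, "uppercase-test");
        // Never contacted: the test driver does not open network connections.
        props.put(StreamsConfig.BOOTSTRAP_SERVERS_CONFIG, "dummy:9092");

        try (TopologyTestDriver driver = new TopologyTestDriver(buildTopology(), props)) {
            TestInputTopic<String, String> input = driver.createInputTopic(
                    "input-topic", new StringSerializer(), new StringSerializer());
            TestOutputTopic<String, String> output = driver.createOutputTopic(
                    "output-topic", new StringDeserializer(), new StringDeserializer());

            input.pipeInput("key-1", value);
            return output.readValue();
        }
    }

    public static void main(String[] args) {
        System.out.println(process("hello kafka")); // prints HELLO KAFKA
    }
}
```

In a real project the body of `process` would typically live in a test method of your test framework, with the topology built by the application code under test.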

Dev/Test/Prod Environments

Keep your development, test, and production resources separate from one another.

- GitOps: promote your application through Dev/Test/Prod environments; see our deeper dive on GitOps.

- GitHub Actions: automate your pipelines; see the GitHub Actions example for Kafka client applications.

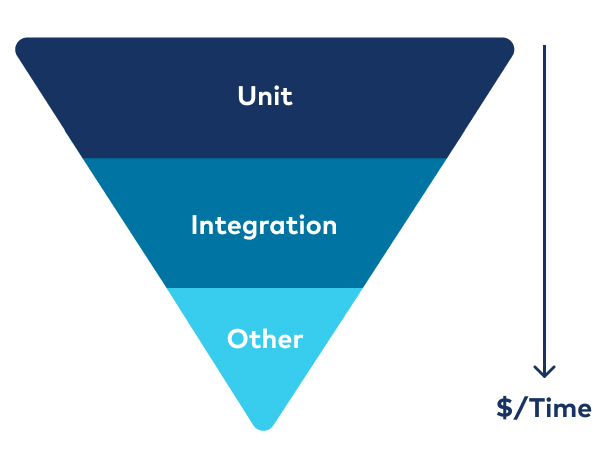

Types of Testing

- Unit testing: Unit tests are cheap and fast to run, giving developers a fast feedback cycle. Their fine granularity pinpoints the exact location of a problem. Unit tests typically run as a single process and do not use the network or disk.

- Integration testing: For testing with other components like a Kafka broker, you can run with simulated Kafka components or real ones. Integration tests can be slower to run, and their failures harder to troubleshoot, than unit tests.

- Performance, soak, and chaos testing: For optimizing your client applications, validating long-running code, and verifying resilience against failures.

Clients that write to Kafka are called producers, and clients that read from Kafka are called consumers. Often, applications and services act as both producers and consumers. The streaming database ksqlDB and the Kafka Streams application library are also clients, because they have embedded producers and consumers. The following test utilities are available for testing your client applications:

| | ksqlDB | Kafka Streams | JVM Producer & Consumer | librdkafka Producer & Consumer |
|---|---|---|---|---|
| Unit Testing | ksql-test-runner | TopologyTestDriver | MockProducer, MockConsumer | rdkafka_mock |
| Integration Testing | Testcontainers, Confluent Cloud | Testcontainers, Confluent Cloud | Testcontainers, Confluent Cloud | trivup, Confluent Cloud |
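To illustrate the MockProducer entry in the table, here is a minimal Java sketch of a unit test that verifies what an application sends, with no broker involved. The `OrderPublisher` class, the `orders` topic, and the record contents are hypothetical; only `MockProducer`, `Producer`, `ProducerRecord`, and `StringSerializer` come from the `kafka-clients` library, which is assumed to be on the classpath.

```java
import java.util.List;

import org.apache.kafka.clients.producer.MockProducer;
import org.apache.kafka.clients.producer.Producer;
import org.apache.kafka.clients.producer.ProducerRecord;
import org.apache.kafka.common.serialization.StringSerializer;

class MockProducerSketch {

    // Hypothetical application code: it depends on the Producer interface,
    // so a test can hand it a MockProducer instead of a real KafkaProducer.
    static class OrderPublisher {
        private final Producer<String, String> producer;

        OrderPublisher(Producer<String, String> producer) {
            this.producer = producer;
        }

        void publish(String orderId, String payload) {
            producer.send(new ProducerRecord<>("orders", orderId, payload));
        }
    }

    // Publishes two orders through a MockProducer and returns what was recorded.
    static List<ProducerRecord<String, String>> publishTwoOrders() {
        // autoComplete = true: every send() completes successfully right away.
        MockProducer<String, String> mock =
                new MockProducer<>(true, new StringSerializer(), new StringSerializer());

        OrderPublisher publisher = new OrderPublisher(mock);
        publisher.publish("order-1", "{\"total\": 10}");
        publisher.publish("order-2", "{\"total\": 25}");

        // history() lists the records passed to send(), in order.
        return mock.history();
    }

    public static void main(String[] args) {
        for (ProducerRecord<String, String> record : publishTwoOrders()) {
            System.out.println(record.topic() + " " + record.key() + " " + record.value());
        }
    }
}
```

Writing the application against the `Producer` interface rather than the concrete `KafkaProducer` class is what makes this substitution possible; MockConsumer can be used the same way on the consuming side.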

Learn more about application testing

Schema Registry and Data Formats like Avro, Protobuf, JSON Schema

Without schemas, data contracts are defined only loosely and out-of-band (if at all), which carries the high risk that consumers of data will break as producers change their behavior over time. Data schemas help to put in place explicit “data contracts” to ensure that data written by Kafka producers can always be read by Kafka consumers, even as producers and consumers evolve their schemas.

This is where Confluent Schema Registry helps: It provides centralized schema management and compatibility checks as schemas evolve. It supports Avro, Protobuf, and JSON Schema.

| | Schema Registry |
|---|---|
| Unit Testing | MockSchemaRegistryClient |
| Integration Testing | EmbeddedSingleNodeKafkaCluster, Testcontainers |
| Compatibility Testing | Schema Registry Maven Plugin |
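To make the idea of a compatibility check concrete, the sketch below tests whether a consumer using a new Avro schema can still read data written with an older one. Note that this is not the Schema Registry Maven Plugin or the Schema Registry API: it swaps in Apache Avro's own local `SchemaCompatibility` helper, which is assumed to be on the classpath via the `avro` library. The `Order` record and its fields are hypothetical.

```java
import org.apache.avro.Schema;
import org.apache.avro.SchemaCompatibility;
import org.apache.avro.SchemaCompatibility.SchemaCompatibilityType;

class SchemaEvolutionSketch {

    // Hypothetical original schema: an Order with a single field.
    static final String V1 =
            "{\"type\":\"record\",\"name\":\"Order\",\"fields\":["
            + "{\"name\":\"id\",\"type\":\"string\"}]}";

    // Adds a field WITH a default, so old records can still be read.
    static final String V2_WITH_DEFAULT =
            "{\"type\":\"record\",\"name\":\"Order\",\"fields\":["
            + "{\"name\":\"id\",\"type\":\"string\"},"
            + "{\"name\":\"note\",\"type\":\"string\",\"default\":\"\"}]}";

    // Adds a field WITHOUT a default, so old records cannot be read.
    static final String V2_NO_DEFAULT =
            "{\"type\":\"record\",\"name\":\"Order\",\"fields\":["
            + "{\"name\":\"id\",\"type\":\"string\"},"
            + "{\"name\":\"note\",\"type\":\"string\"}]}";

    // True if a reader using readerJson can decode data written with writerJson.
    static boolean canRead(String readerJson, String writerJson) {
        // Separate parsers: one parser cannot define the name "Order" twice.
        Schema reader = new Schema.Parser().parse(readerJson);
        Schema writer = new Schema.Parser().parse(writerJson);
        return SchemaCompatibility.checkReaderWriterCompatibility(reader, writer)
                .getType() == SchemaCompatibilityType.COMPATIBLE;
    }

    public static void main(String[] args) {
        System.out.println(canRead(V2_WITH_DEFAULT, V1)); // prints true
        System.out.println(canRead(V2_NO_DEFAULT, V1));   // prints false
    }
}
```

A check like this catches a breaking schema change in a plain unit test, before the schema is ever registered against a real Schema Registry.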


Other Testing

Harden your application with:

- Performance testing: Optimize your app for Throughput, Latency, Durability, or Availability

- Soak testing: Run code for longer periods of time to surface problems such as memory leaks

- Chaos testing: Inject random failures and bottlenecks

You can benchmark with basic Apache Kafka command line tools like kafka-producer-perf-test and kafka-consumer-perf-test. For the other types of testing, consider Trogdor, Testcontainers modules like Toxiproxy, and Pumba for Docker environments.

Using Realistic Data

To dive into more involved scenarios, test your client application, or perhaps build a cool Kafka demo for your teammates, you may want to use more realistic datasets. One option is to copy data from a production environment to a test environment (using Cluster Linking, Confluent Replicator, or Kafka's MirrorMaker, for example) or to pull data from another live system.

Alternatively, you can generate mock data for your topics with predefined schema definitions, including complex records and multiple fields. If you use the Datagen Connector, you can format your data as Avro, JSON, or Protobuf.