Jay Kreps

Co-creator of Apache Kafka & CEO of Confluent

A platform for real-time event streaming. Capture, store, process, and query event data in real time with Confluent, built on Apache Kafka.

$ # Capture changes in real time from a Postgres database.

$ cat postgres.json

{

"name": "PostgresCdcSourceConnector_0",

"config": {

"connector.class": "PostgresCdcSource",

"name": "PostgresCdcSourceConnector_0",

"kafka.auth.mode": "KAFKA_API_KEY",

"kafka.api.key": "****************",

"kafka.api.secret": "*************",

"database.hostname": "my-db-name",

"database.port": "5532",

"database.user": "admin",

"database.password": "***********",

"database.dbname": "customers",

"database.server.name": "customers",

"database.sslmode": "require",

"output.data.format": "AVRO",

"output.key.format": "AVRO",

"tasks.max": "1"

}

}

$ confluent connect create --config postgres.json --cluster lkc-123456

Created connector PostgresReader lcc-gq926m

$ # Process streams of data in-flight with SQL.

$ ksql-cli

ksqldb> CREATE STREAM tierOnePayments AS SELECT * FROM payments WHERE tier='1';

ksqldb> exit

$ # Write real-time streams out to BigQuery.

$ confluent connect create --config bigquery.json --cluster lkc-123456

Created connector BigQueryWriter lcc-gq926m

$ # Launch a Spring Boot microservice, powered by streams.

$ gradle bootRun --args='--background --cluster lkc-123456'

$ # Detect and store changes on S3 bucket objects.

$ confluent connect create --config s3.json --cluster lkc-123456

Created connector S3Writer lcc-gq926m

$ # Link two Kafka clusters together for geographical replication.

$ confluent kafka link create east-west-link --cluster lkc-123456 --source-cluster lkc-245839

Link created.

$ # Trigger a Serverless AWS Lambda function on every event.

$ confluent connect create --config lambda.json --cluster lkc-123456

Created connector LambdaWriter lcc-gq926m

Capture and store events as soon as they occur. Forward them to Kafka with pre-built connectors, or directly use the APIs if that’s easier. If you’re just getting started, generate sample events to see how it all works.

$ confluent connect create --config my-config.json --cluster lkc-123456

Created connector DatabaseReader lcc-gq926m

$ confluent connect list

     ID     |      Name      | Status  |  Type  | Trace
------------+----------------+---------+--------+-------
 lcc-gq926m | DatabaseReader | RUNNING | source |

Execute code as events arrive. Derive new streams from existing streams. Power applications, microservices, and data pipelines in real time. Program declaratively with SQL, imperatively with Java, or anything else that can speak to Kafka.

CREATE STREAM clicks_transformed AS

SELECT UCASE(userid), page, action

FROM clickstream c

WHERE action != 'delete'

EMIT CHANGES;

Sink event streams to any of your systems. Use pre-built connectors, or leverage the client APIs if you need to do something custom. Peek into streams using the CLI for easy development.

$ confluent connect create --config my-config.json --cluster lkc-123456

Created connector S3Writer lcc-pc346q

$ confluent connect list

     ID     |   Name   | Status  | Type | Trace
------------+----------+---------+------+-------
 lcc-pc346q | S3Writer | RUNNING | sink |

Kafka’s decoupled protocol gets you exactly the data you want. Data gets broadcast so all consumers see the same stream. Rewindability lets consumers read data at different rates. Connect it all together with cluster links for replication.
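To make the broadcast and rewind ideas above concrete, here is a toy in-memory model in plain Java. It is only a sketch of the concept, not how Kafka is implemented: the class name `ToyLog`, its methods, and the group names are all hypothetical. The point it illustrates is that the log is shared and append-only, while each consumer group keeps its own offset, so groups can read at different rates and replay old events.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Toy in-memory log: illustrative only, not Kafka's implementation. */
public class ToyLog {
    // The shared, append-only sequence of events.
    private final List<String> events = new ArrayList<>();
    // Each consumer group tracks its own read position (offset) in the log.
    private final Map<String, Integer> offsets = new HashMap<>();

    /** Appends an event; existing events are never modified or removed. */
    public void append(String event) {
        events.add(event);
    }

    /** Returns up to max unread events for the group and advances its offset. */
    public List<String> poll(String group, int max) {
        int from = offsets.getOrDefault(group, 0);
        int to = Math.min(events.size(), from + max);
        offsets.put(group, to);
        return new ArrayList<>(events.subList(from, to));
    }

    /** Moves a group to an absolute offset, for example 0 to replay everything. */
    public void seek(String group, int offset) {
        if (offset < 0 || offset > events.size()) {
            throw new IllegalArgumentException("offset out of range: " + offset);
        }
        offsets.put(group, offset);
    }

    public static void main(String[] args) {
        ToyLog log = new ToyLog();
        for (String e : List.of("a", "b", "c")) {
            log.append(e);
        }
        System.out.println(log.poll("fast", 10)); // [a, b, c]
        System.out.println(log.poll("slow", 1));  // [a]
        log.seek("fast", 0);                      // rewind the fast group
        System.out.println(log.poll("fast", 2));  // [a, b]
    }
}
```

Reading never removes anything from the log, which is why the slow group still sees every event and the fast group can rewind without affecting anyone else.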

Properties props = new Properties();

props.put("bootstrap.servers", "pkc-2rtq7.us-west4.gcp.confluent.cloud:9092");

props.put("group.id", "logging-group");

Consumer<String, String> consumer = new KafkaConsumer<String, String>(props);

consumer.subscribe(Arrays.asList(topic));

while (true) {

ConsumerRecords<String, String> records = consumer.poll(100);

for (ConsumerRecord<String, String> record : records) {

System.out.printf("offset = %d, key = %s, value = %s\n",

record.offset(), record.key(), record.value());

}

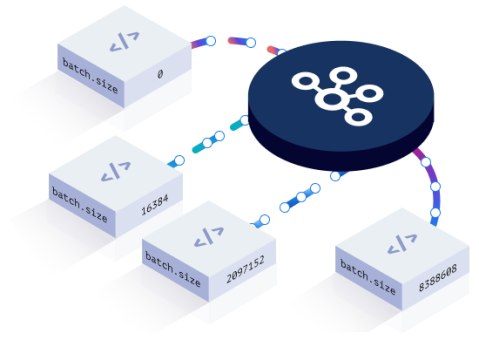

}We sweat the milliseconds, too. Precisely control throughput, latency, and fault tolerance with a few simple configuration settings.

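Properties properties = new Properties();
// Connection and serializer settings are required before the producer can start.
properties.setProperty(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "pkc-2rtq7.us-west4.gcp.confluent.cloud:9092");
properties.setProperty(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
properties.setProperty(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, "org.apache.kafka.common.serialization.StringSerializer");
// Throughput, latency, and durability: 64 KiB batches, 20 ms linger, acks from all in-sync replicas.
properties.setProperty(ProducerConfig.BATCH_SIZE_CONFIG, "65536");
properties.setProperty(ProducerConfig.LINGER_MS_CONFIG, "20");
properties.setProperty(ProducerConfig.ACKS_CONFIG, "all");
KafkaProducer<String, String> producer = new KafkaProducer<>(properties);

Mission-critical workloads require strong guarantees. Kafka's support for transactions gives you the certainty you need.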
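As a rough sketch of what turning on transactions might look like, the snippet below builds producer settings for transactional writes using only `java.util.Properties`, so it runs without the Kafka client on the classpath. The class name `TransactionalProducerConfig`, the `build` helper, the broker address, and the transactional ID are hypothetical placeholders. The property keys are standard Kafka producer settings. Authentication settings, which a Confluent Cloud cluster would also need, are left out.

```java
import java.util.Properties;

/** Sketch: producer settings for transactional writes (names are illustrative). */
public class TransactionalProducerConfig {

    /** Builds producer properties with transactions enabled. */
    public static Properties build(String bootstrapServers, String transactionalId) {
        Properties p = new Properties();
        p.setProperty("bootstrap.servers", bootstrapServers);
        p.setProperty("key.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        p.setProperty("value.serializer",
                "org.apache.kafka.common.serialization.StringSerializer");
        // A stable transactional.id identifies this producer across restarts.
        p.setProperty("transactional.id", transactionalId);
        // Transactions rely on idempotent writes acknowledged by all in-sync replicas.
        p.setProperty("enable.idempotence", "true");
        p.setProperty("acks", "all");
        return p;
    }

    public static void main(String[] args) {
        // Hypothetical values, for illustration only.
        Properties p = build("localhost:9092", "payments-tx-1");
        System.out.println("transactional.id = " + p.getProperty("transactional.id"));

        // With kafka-clients on the classpath, the usual call sequence is:
        //   KafkaProducer<String, String> producer = new KafkaProducer<>(p);
        //   producer.initTransactions();      // once, before the first transaction
        //   producer.beginTransaction();
        //   producer.send(...);               // one or more records
        //   producer.commitTransaction();     // or abortTransaction() on failure
    }
}
```

Consumers that should only see committed records would typically set `isolation.level` to `read_committed`.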

Confluent Cloud is a fully managed, cloud-native service for Apache Kafka® and its ecosystem. Build your business and forget about operating infrastructure.

The "modern" data stack doesn't fix modern problems. We have more data systems, platforms, and apps than we could have ever imagined. More than anything, they all need to connect and work together. Kafka and Confluent were built from the ground up to solve this problem.


Build anything from data pipelines to applications. Pick your preferred language and get started!
