Getting Started with Kafka Streams

Sophie Blee-Goldman

Senior Software Engineer (Presenter)

Bill Bejeck

Integration Architect (Author)

Getting Started

To understand Kafka Streams, you need to begin with Apache Kafka—a distributed, scalable, elastic, and fault-tolerant event-streaming platform.

Logs, Brokers, and Topics

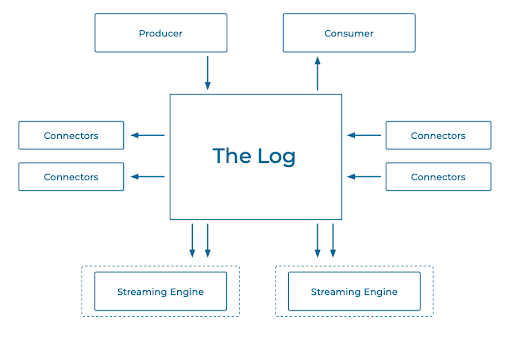

At the heart of Kafka is the log, which is simply a file where records are appended. The log is immutable, but you usually can't store an infinite amount of data, so you can configure how long your records live.
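The idea of records that "live" for a configured time can be sketched in plain Java. This is a toy model under stated assumptions, not Kafka's implementation: the class name `RetainedLog` and its methods are hypothetical, and a real broker applies retention (for example through the topic-level `retention.ms` setting) by deleting whole log segments rather than individual records.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayDeque;
import java.util.Deque;

/** Toy append-only log with time-based retention (hypothetical, for illustration only). */
class RetainedLog {
    /** A stored record plus the time it was appended. Records are never modified. */
    private static final class Entry {
        final Instant appendedAt;
        final String value;

        Entry(Instant appendedAt, String value) {
            this.appendedAt = appendedAt;
            this.value = value;
        }
    }

    private final Deque<Entry> entries = new ArrayDeque<>();
    private final Duration retention;

    RetainedLog(Duration retention) {
        this.retention = retention;
    }

    /** Appends a record at the end of the log. */
    void append(Instant now, String value) {
        entries.addLast(new Entry(now, value));
    }

    /** Drops records older than the retention period, oldest first; returns how many were removed. */
    int enforceRetention(Instant now) {
        Instant cutoff = now.minus(retention);
        int removed = 0;
        while (!entries.isEmpty() && entries.peekFirst().appendedAt.isBefore(cutoff)) {
            entries.removeFirst();
            removed++;
        }
        return removed;
    }

    int size() {
        return entries.size();
    }
}
```

With a seven-day retention, a record appended on day 0 is dropped when retention is enforced on day 8, while records appended on days 3 and 6 are kept.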

The storage layer for Kafka is called a broker, and the log resides on the broker's filesystem. A topic is simply a logical construct that names the log—it's effectively a directory within the broker's filesystem.

Getting Records into and out of Brokers: Producers and Consumers

You put data into Kafka with producers and get it out with consumers. Producers send a produce request with records to the log, and each record, as it arrives, is assigned a special number called an offset, which is just the logical position of that record in the log. Consumers send a fetch request to read records, and they use offsets as bookmarks. For example, a consumer will read up to offset 5, and when it comes back, it will start reading at offset 6. Consumers are organized into groups, and the data from a topic's partitions is distributed among the members of the group.
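The offset bookkeeping described above can be modelled in a few lines of plain Java. This is a toy, in-memory sketch and not the Kafka client API: the class names `ToyLog` and `ToyConsumer` are hypothetical, and a real broker persists the log on disk and tracks consumer positions per group and partition.

```java
import java.util.ArrayList;
import java.util.List;

/** Toy append-only log: each appended record is assigned the next offset. */
class ToyLog {
    private final List<String> records = new ArrayList<>();

    /** Appends a record and returns the offset it was assigned. */
    long append(String value) {
        records.add(value);
        return records.size() - 1;
    }

    /** Returns up to maxRecords records starting at fromOffset (empty if past the end). */
    List<String> read(long fromOffset, int maxRecords) {
        int start = (int) Math.max(0, Math.min(fromOffset, records.size()));
        int end = Math.min(start + maxRecords, records.size());
        return new ArrayList<>(records.subList(start, end));
    }
}

/** Toy consumer that remembers the next offset to read, like a bookmark. */
class ToyConsumer {
    private final ToyLog log;
    private long nextOffset = 0;

    ToyConsumer(ToyLog log) {
        this.log = log;
    }

    /** Reads the next batch and advances the bookmark past it. */
    List<String> poll(int maxRecords) {
        List<String> batch = log.read(nextOffset, maxRecords);
        nextOffset += batch.size();
        return batch;
    }

    /** The offset the next poll will start from. */
    long position() {
        return nextOffset;
    }
}
```

If a first `poll(6)` reads offsets 0 through 5, `position()` is then 6, so the next poll resumes at offset 6, which matches the example in the text.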

Connectors

Connectors are an abstraction over producers and consumers. Perhaps you need to export database records to Kafka. You configure a source connector to listen to certain database tables, and as records come in, the connector pulls them out and sends them to Kafka. Sink connectors do the opposite: If you want to write records to an external store such as MongoDB, for example, a sink connector reads records from a topic as they come in and forwards them to your MongoDB instance.

Streaming Engine

Now that you are familiar with Kafka's logs, topics, brokers, connectors, and how its producers and consumers work, it's time to move on to its stream processing component. Kafka Streams is an abstraction over producers and consumers that lets you ignore low-level details and focus on processing your Kafka data. Since it's declarative, processing code written in Kafka Streams is far more concise than the same code would be if written using the low-level Kafka clients.

Kafka Streams is a Java library: You write your code, create a JAR file, and then start your standalone application that streams records to and from Kafka (it doesn't run on the same node as the broker). You can run Kafka Streams on anything from a laptop all the way up to a large server.

Say you have sensors on a production line, and you want a readout of what's happening, so you begin to work with the sensors' data. You need to pay special attention to the temperature (whether it's too high or too low) and the weight (whether each widget is the right size). You might stream records like the example below into a Kafka topic:

    {
      "reading_ts": "2020-02-14T12:19:27Z",
      "sensor_id": "aa-101",
      "production_line": "w01",
      "widget_type": "acme94",
      "temp_celsius": 23,
      "widget_weight_g": 100
    }

You can then use the topic in all sorts of ways. Consumers in different consumer groups have nothing to do with each other, so you would be able to subscribe to the topic with many different services and potentially generate alerts.
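As a rough illustration of what one such alerting service might check, here is a minimal sketch in plain Java. It only models the record fields shown above; the class names `SensorReading` and `WidgetAlerts` and all threshold values are assumptions made for this example, not part of the original material, and reading the records from Kafka is left out.

```java
/** One sensor reading, mirroring the fields of the example record. */
final class SensorReading {
    final String readingTs;
    final String sensorId;
    final String productionLine;
    final String widgetType;
    final int tempCelsius;
    final int widgetWeightG;

    SensorReading(String readingTs, String sensorId, String productionLine,
                  String widgetType, int tempCelsius, int widgetWeightG) {
        this.readingTs = readingTs;
        this.sensorId = sensorId;
        this.productionLine = productionLine;
        this.widgetType = widgetType;
        this.tempCelsius = tempCelsius;
        this.widgetWeightG = widgetWeightG;
    }
}

/** Hypothetical alert rules; every limit below is an illustrative assumption. */
final class WidgetAlerts {
    static final int MIN_TEMP_C = 15;
    static final int MAX_TEMP_C = 30;
    static final int TARGET_WEIGHT_G = 100;
    static final int WEIGHT_TOLERANCE_G = 5;

    /** True when the temperature is too low or too high. */
    static boolean temperatureOutOfRange(SensorReading r) {
        return r.tempCelsius < MIN_TEMP_C || r.tempCelsius > MAX_TEMP_C;
    }

    /** True when the widget weight deviates too far from the target. */
    static boolean weightOutOfTolerance(SensorReading r) {
        return Math.abs(r.widgetWeightG - TARGET_WEIGHT_G) > WEIGHT_TOLERANCE_G;
    }

    /** True when either check fails. */
    static boolean needsAlert(SensorReading r) {
        return temperatureOutOfRange(r) || weightOutOfTolerance(r);
    }
}
```

Under these assumed limits, the example record (23 degrees Celsius, 100 g) raises no alert, while a reading of 45 degrees or a 90 g widget would.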

Processing Data: Vanilla Kafka vs. Kafka Streams

As mentioned, processing code written in Kafka Streams is far more concise than the same code would be if written using the low-level Kafka clients. One way to examine their approaches for interacting with the log is to compare their corresponding APIs. In the code below, you create a producer and consumer, and then subscribe to the single topic widgets. Then you poll() your records, and a ConsumerRecords collection is returned. You loop over the records and pull out the values, keeping only the widgets that are red. For each red widget, you create a new ProducerRecord and write it out to the widgets-red topic. Once those records are written, any number of different consumers can read them.

    public static void main(String[] args) {
        // consumerProperties(), producerProperties(), and Widget are defined elsewhere (not shown).
        try (Consumer<String, Widget> consumer = new KafkaConsumer<>(consumerProperties());
             Producer<String, Widget> producer = new KafkaProducer<>(producerProperties())) {
            consumer.subscribe(Collections.singletonList("widgets"));
            while (true) {
                // Fetch the next batch, waiting up to five seconds for records to arrive.
                ConsumerRecords<String, Widget> records = consumer.poll(Duration.ofSeconds(5));
                for (ConsumerRecord<String, Widget> record : records) {
                    Widget widget = record.value();
                    // Keep only the red widgets and forward them to the output topic.
                    if (widget.getColour().equals("red")) {
                        ProducerRecord<String, Widget> producerRecord = new ProducerRecord<>("widgets-red", record.key(), widget);
                        producer.send(producerRecord, (metadata, exception) -> {…….} );
    …

Here is the code to do the same thing in Kafka Streams:

    final StreamsBuilder builder = new StreamsBuilder();
    // stringSerde and widgetsSerde are defined elsewhere (not shown).
    builder.stream("widgets", Consumed.with(stringSerde, widgetsSerde))
           .filter((key, widget) -> widget.getColour().equals("red"))
           .to("widgets-red", Produced.with(stringSerde, widgetsSerde));

You instantiate a StreamsBuilder, then you create a stream based on a topic and give it Serdes (serializer/deserializers) for the keys and values. Then you filter the records and write them back out to the widgets-red topic.

With Kafka Streams, you state what you want to do, rather than how to do it. This is far more declarative than the vanilla Kafka example.
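The same "what versus how" contrast can be seen without a broker, using plain Java collections. This sketch is an analogy only: it uses `java.util.stream`, not the Kafka Streams API, and `DemoWidget` and `RedWidgetFilter` are hypothetical names introduced for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.stream.Collectors;

/** Hypothetical stand-in for the Widget type, with only a colour. */
class DemoWidget {
    private final String colour;

    DemoWidget(String colour) {
        this.colour = colour;
    }

    String getColour() {
        return colour;
    }
}

class RedWidgetFilter {
    /** Imperative: spell out how to loop, test, and collect. */
    static List<DemoWidget> imperative(List<DemoWidget> widgets) {
        List<DemoWidget> red = new ArrayList<>();
        for (DemoWidget widget : widgets) {
            if ("red".equals(widget.getColour())) {
                red.add(widget);
            }
        }
        return red;
    }

    /** Declarative: state what to keep and let the library drive the iteration. */
    static List<DemoWidget> declarative(List<DemoWidget> widgets) {
        return widgets.stream()
                .filter(widget -> "red".equals(widget.getColour()))
                .collect(Collectors.toList());
    }
}
```

Both methods return the same widgets in the same order; the declarative version simply leaves the iteration and collection mechanics to the library, much as the Kafka Streams version leaves polling and producing to the framework.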

Errata

- The Confluent Cloud signup process illustrated in this video includes a step to enter payment details. This requirement has been eliminated since the video was recorded. You can now sign up without entering any payment information.
