How to Start Flink and Get Set Up (Exercise)

Wade Waldron

Principal Software Practice Lead


In this exercise, you will be setting up Confluent Cloud and Flink for use in the rest of the course. You'll also download the project template that contains the basic code you will need to get started.

Download the Code

The exercises come with some pre-existing code. You have two options for accessing it: Local or Gitpod.

Local development is a good choice if you want an environment you can reuse for future projects.

If you prefer to have a disposable environment or get stuck setting it up locally, then Gitpod is a good choice. If you are unfamiliar with Gitpod, the following video provides a brief introduction:

Introducing Gitpod for Confluent Developer

Local Development

For local development, you need a suitable Java development environment, including:

- Java 11

- Maven

- An IDE such as IntelliJ, Eclipse, or VS Code

NOTE: It is critical that you use Java 11 when working on these exercises. Flink does not currently support anything other than Java 8 or 11. Furthermore, the exercise code assumes you are using Java 11 and may use features that are unavailable in Java 8. Make sure that your $JAVA_HOME points to a Java 11 installation.

To easily switch between Java versions, we recommend using something like SDKMAN.
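Before building the exercises, it can help to confirm which JDK $JAVA_HOME actually points to. The following is a minimal sketch, assuming a Bash shell; the function names (java_major_version, java_home_version, check_java_home) are hypothetical helpers, not part of the course code. It handles both the legacy 1.8.x version scheme used by Java 8 and the modern 11.x scheme.

```shell
#!/usr/bin/env bash
# Sketch: check that $JAVA_HOME points at a Java 11 JDK.
# Function names are hypothetical helpers, not part of the course code.

# Print the major version for a Java version string.
# "1.8.0_392" (legacy scheme) -> 8, "11.0.21" (modern scheme) -> 11
java_major_version() {
  local version="$1"
  case "$version" in
    1.*) version="${version#1.}" ;;   # drop the legacy "1." prefix
  esac
  echo "${version%%[._-]*}"           # keep text before the first ".", "_" or "-"
}

# Print the version string reported by $JAVA_HOME/bin/java.
# `java -version` writes to stderr, e.g.: openjdk version "11.0.21" 2023-10-17
java_home_version() {
  "$JAVA_HOME/bin/java" -version 2>&1 |
    sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Succeed only if $JAVA_HOME is set and its java reports major version 11.
check_java_home() {
  if [ -z "${JAVA_HOME:-}" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  local version major
  version=$(java_home_version)
  if [ -z "$version" ]; then
    echo "could not run $JAVA_HOME/bin/java" >&2
    return 1
  fi
  major=$(java_major_version "$version")
  if [ "$major" != "11" ]; then
    echo "JAVA_HOME points to Java $major; the exercises expect Java 11" >&2
    return 1
  fi
  echo "OK: JAVA_HOME points to Java $version"
}
```

Source the script and run check_java_home. If it reports the wrong version, SDKMAN can list the available JDKs with sdk list java, and sdk use java <identifier> switches the current shell (including JAVA_HOME) to the Java 11 build you pick.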

Clone or download the exercise code from GitHub:

Gitpod Development

For Gitpod development, use the following link:

When you open Gitpod, it will open a series of tabs.

- Simple Browser: This tab displays the UI for Flink, which is already installed in the environment. Leave it open.

- Java Extensions: This tab will suggest installing a JDK. You can close this tab since Java 11 is already installed.

- Welcome: This tab provides a few Gitpod shortcuts. Feel free to close it.

You will also have four terminal tabs:

- Data Generator

- Flight Importer

- User Statistics

- Misc

You can use these terminals to execute portions of your application or run utility scripts.

You will still need to create a Confluent Cloud account and create a cluster.

Register for Confluent Cloud

Note: If you already have a Confluent Cloud account, you can skip ahead to Create a New Environment.

- Head over to the Confluent Cloud signup page and sign up for a new account.

- Watch your inbox for a confirmation email and follow the link to proceed.

- You will be asked to create a cluster. Feel free to ignore this. We'll be creating a cluster in a later step.

[Optional] Install the Confluent CLI

If you prefer to work from the command line, you can install the Confluent CLI by following the instructions here.

Then, refer to the Command Line Reference sections that follow many of the instructions below.

Create a New Environment

We will create a cloud environment specifically for this course. This will ensure isolation and allow for easy cleanup.

WARNING: To protect the integrity of your production environment, do not use a production environment for this course, and do not use the environment you create for anything other than the course. We strongly recommend using a new environment with new credentials, both to protect yourself and to allow easy cleanup.

- From the left-hand navigation menu or the right-hand main menu, select "Environments".

- Click + Add cloud environment.

- Name your environment building-flink-applications-in-java.

-

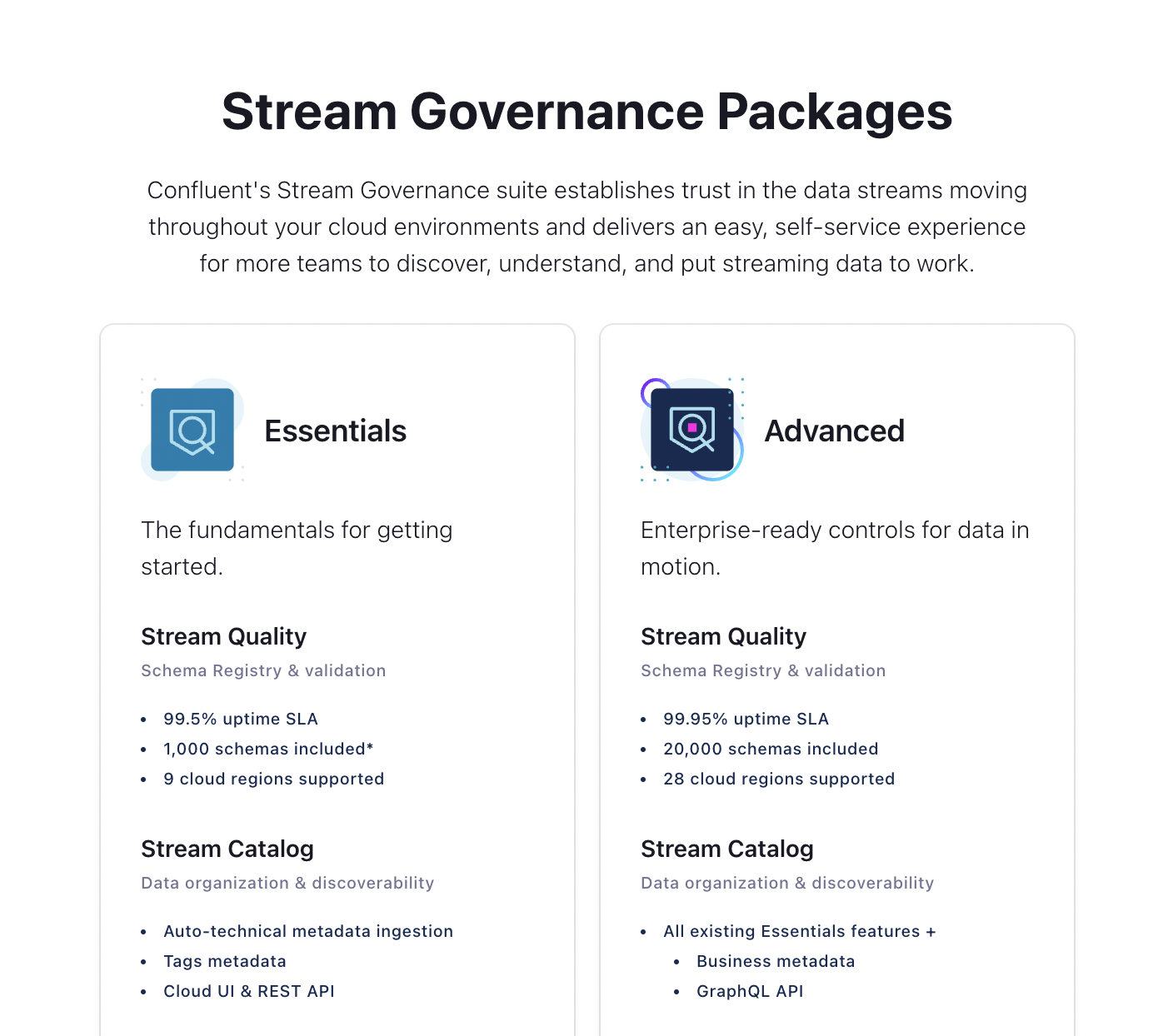

When offered a choice on which Stream Governance Package to use, select Essentials.

- Select the cloud and region where you want to create your Schema Registry and Stream Catalog (i.e., where you will be storing the metadata).

- Note: To avoid cross-cloud/region traffic, you will want to use the same cloud/region for your Kafka cluster.
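If you take the CLI route described in the Command Line Reference, the environment ID does not have to be copied by hand. The sketch below is one way to script it, assuming the Confluent CLI is installed, you have already run confluent login, and the -o json output of confluent environment create reports the new ID in a top-level id field. The json_field and create_course_environment helpers are hypothetical; json_field is a deliberately minimal sed-based extractor, and jq is the more robust choice if it is installed.

```shell
#!/usr/bin/env bash
# Sketch: create the course environment and make it active in one step.
# Assumes the Confluent CLI is installed and `confluent login` has been run.

ENV_NAME="building-flink-applications-in-java"

# Print a top-level string field from flat JSON read on stdin.
# Deliberately minimal: assumes the value contains no escaped quotes.
# With jq installed, `jq -r ".$1"` is the more robust equivalent.
json_field() {
  tr -d '\n' | sed -n "s/.*\"$1\": *\"\([^\"]*\)\".*/\1/p"
}

# Create the environment, switch to it, and print its ID on stdout.
create_course_environment() {
  local env_id
  env_id=$(confluent environment create "$ENV_NAME" -o json | json_field id)
  if [ -z "$env_id" ]; then
    echo "could not read an environment ID from the CLI output" >&2
    return 1
  fi
  # Send the CLI's confirmation text to stderr so stdout carries only the ID.
  confluent environment use "$env_id" >&2
  echo "$env_id"
}
```

For example, ENV_ID=$(create_course_environment) leaves the new environment active and keeps its ID in ENV_ID for later commands.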

Command Line Reference

You can do this from the command line by running:

confluent environment create building-flink-applications-in-java

Once your environment is created, you will need to make it the active environment.

If necessary, list the environments and locate the ID for the building-flink-applications-in-java environment.

confluent environment list

Next, set it as the active environment:

confluent environment use <environment id>

Create a Cluster

Next, we need to create a Kafka cluster for the course.

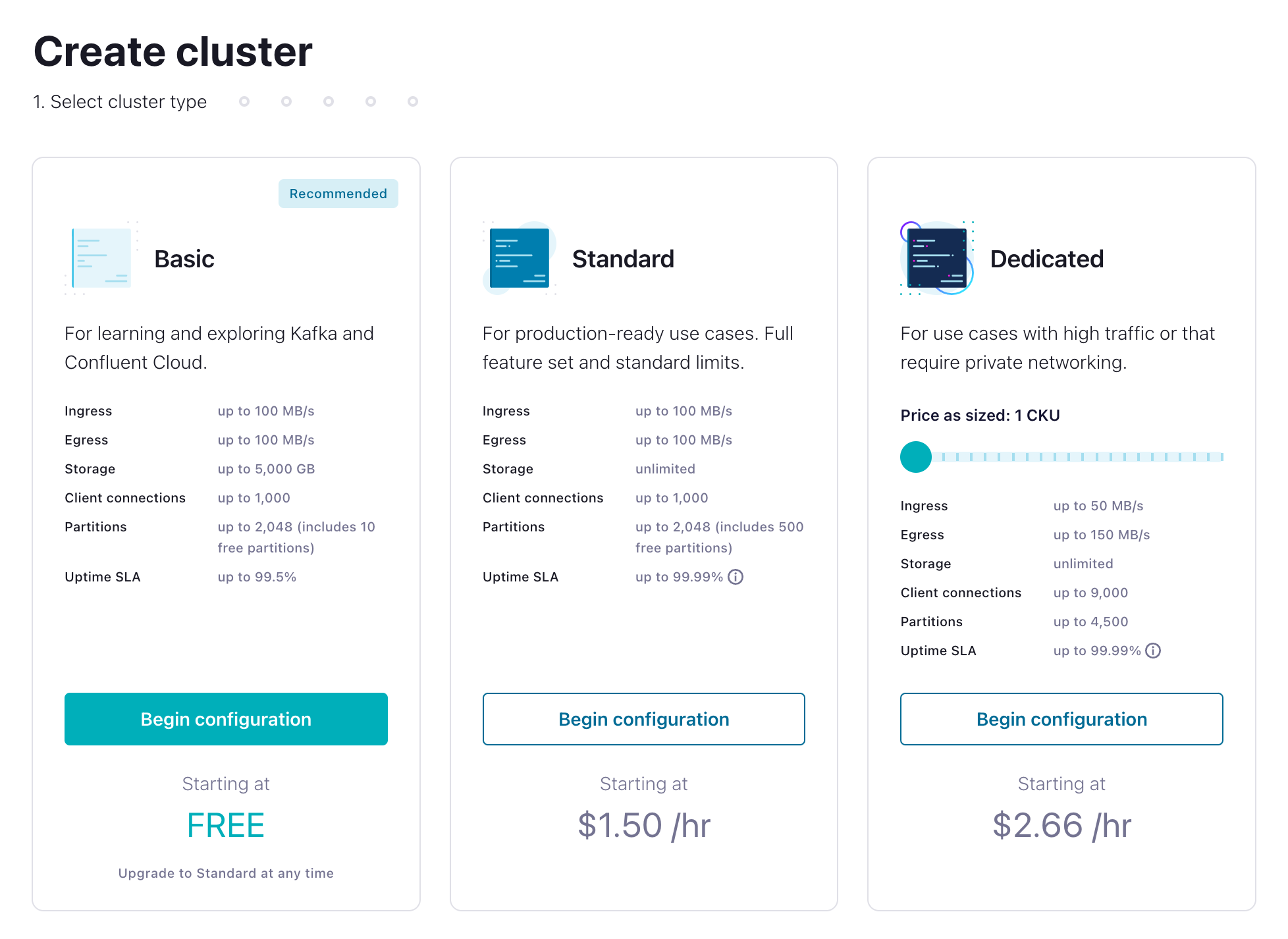

- Inside the building-flink-applications-in-java environment, click Create cluster or Create cluster on my own. You'll be given a choice of which kind of cluster to create. Click Begin Configuration under the Basic cluster.

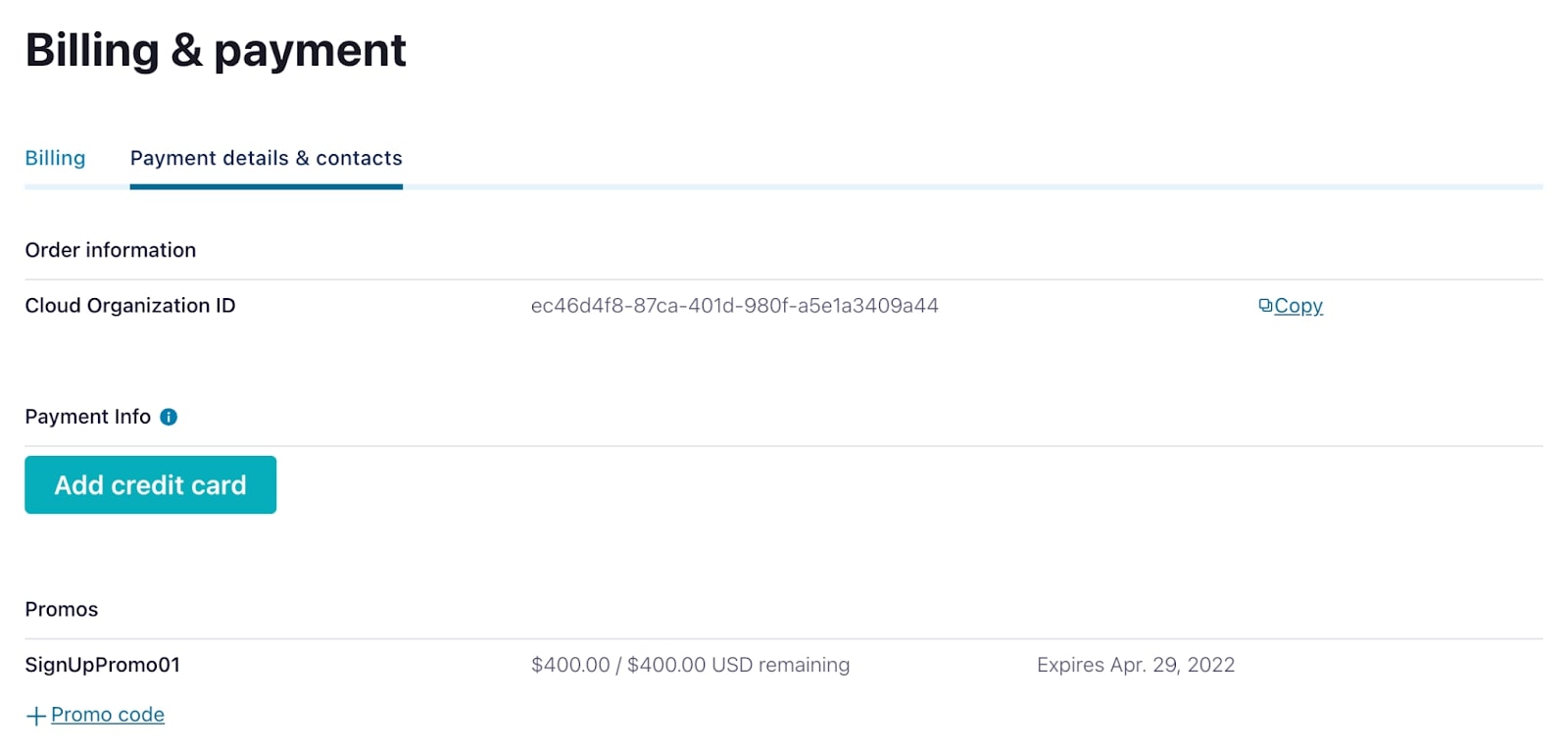

- A Basic cluster used for this exercise won't incur much cost. The free usage you receive, together with the promo code FLINKJAVA101 for $25 of free Confluent Cloud usage, will be more than enough to cover it. You can also use the promo code CONFLUENTDEV1 to delay entering a credit card for 30 days.

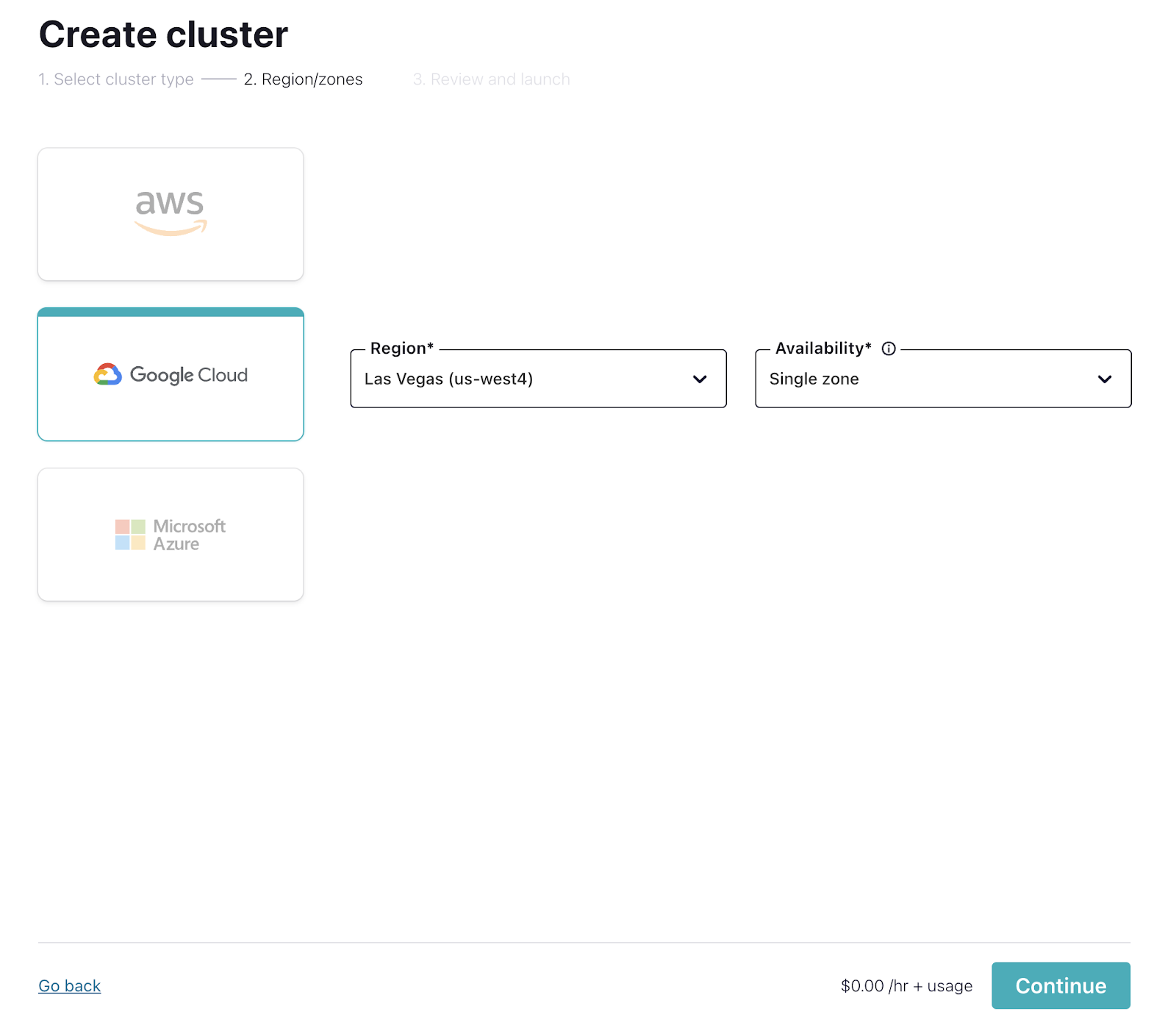

- On the next page, choose your cloud provider, region, and availability (zone). Costs vary with these choices, but they are clearly shown in the dropdown, so you'll know what you're getting.

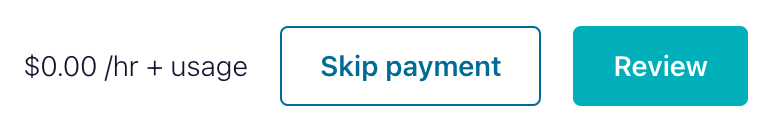

- If you haven't entered a credit card, you will be asked to enter your credit card information. Feel free to choose the Skip Payment option at the bottom of the screen. If you've already entered your credit card, you will be taken directly to the Review screen.

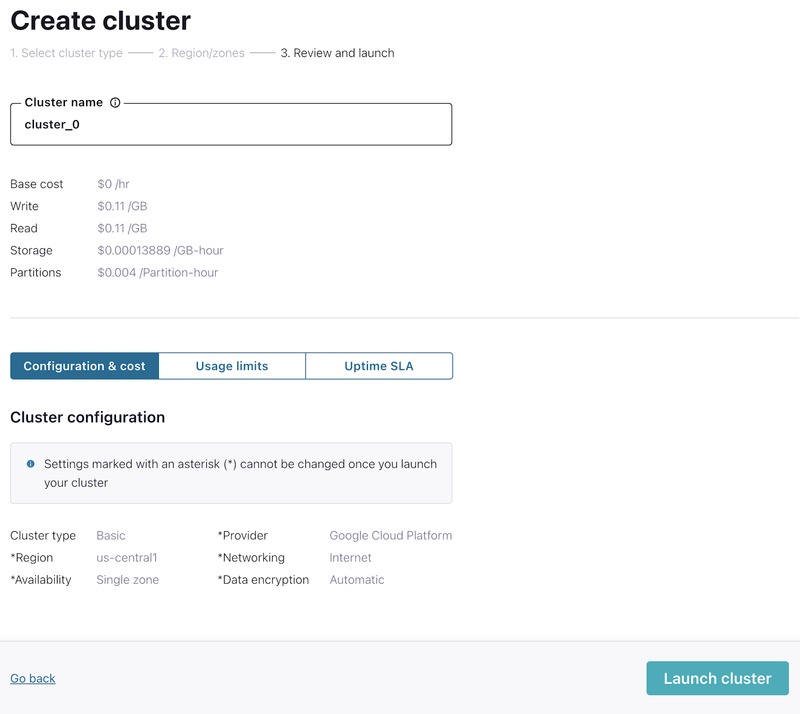

- Click Review to get one last look at the choices you've made. If everything checks out, give your cluster a name, and select Launch cluster.

- While your cluster is being provisioned, set up the FLINKJAVA101 promo code by navigating to Billing & payment from the settings menu in the upper right. On that screen, go to the Payment details & contacts tab to enter the promo code.

Command Line Reference

If you prefer, you can do this from the command line interface by running the following (adjust the cluster name, cloud, and region settings as appropriate):

confluent kafka cluster create <Your Cluster Name> --cloud gcp --region us-central1 --type basic

Add an API Key

We will need an API Key to allow applications to access our cluster, so let's create one.

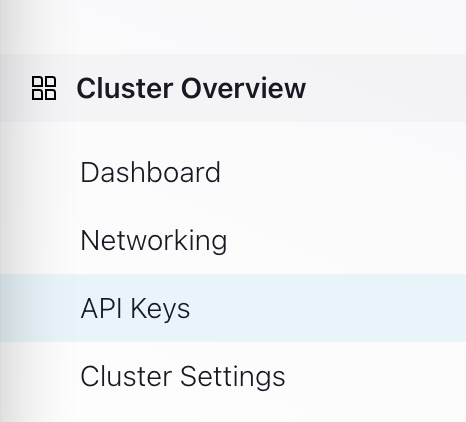

- From the left-hand navigation in your cluster, navigate to Cluster Overview > API keys.

- Create a key with Global access.

Note: For secure production environments, you would want to select Granular access and configure it more securely.

- Download and save the key somewhere for future use.
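If you follow the CLI path, the cluster and API key commands from the Command Line Reference sections can be chained so the cluster ID flows straight into the key request. This is a sketch under several assumptions: the course environment is already active, the cluster name, cloud, and region defaults are placeholders to adjust, the cluster's -o json output exposes its ID in a top-level id field, and the helper names are hypothetical. Because the saved JSON file contains the API secret, the sketch creates it with owner-only permissions.

```shell
#!/usr/bin/env bash
# Sketch: create a Basic cluster, make it active, and create an API key for it.
# Assumes the course environment is already active. CLUSTER_NAME, CLOUD and
# REGION can be set when calling; the defaults below are placeholders.

# Print a top-level string field from flat JSON read on stdin (minimal parser,
# assumes no escaped quotes in the value; jq is more robust if available).
json_field() {
  tr -d '\n' | sed -n "s/.*\"$1\": *\"\([^\"]*\)\".*/\1/p"
}

create_cluster_and_key() {
  local name="${CLUSTER_NAME:-flink-course-cluster}"   # hypothetical default
  local cloud="${CLOUD:-gcp}" region="${REGION:-us-central1}"
  local cluster_id key_file="${name}-key.json"

  cluster_id=$(confluent kafka cluster create "$name" \
    --cloud "$cloud" --region "$region" --type basic -o json | json_field id)
  if [ -z "$cluster_id" ]; then
    echo "could not read a cluster ID from the CLI output" >&2
    return 1
  fi
  confluent kafka cluster use "$cluster_id" >&2

  # The key file holds the API secret, so create it readable by the owner only.
  (umask 077 && confluent api-key create --resource "$cluster_id" \
    --description "${name}-key" -o json > "$key_file") || return 1

  echo "API key saved to $key_file" >&2
  echo "$cluster_id"
}
```

For example, CLUSTER_NAME=<Your Cluster Name> create_cluster_and_key prints the new cluster ID and leaves the key in <Your Cluster Name>-key.json, the same file name used in the Command Line Reference.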

Command Line Reference

If you prefer, you can do this from the command line interface by running the following (adjust the cluster name and ID as appropriate):

confluent api-key create --resource <Your Cluster Id> --description <Your Cluster Name>-key -o json > <Your Cluster Name>-key.json

Set Up Flink

If you have chosen Gitpod as your development environment, then you can skip this step as Flink has already been installed.

If you are developing on your local machine, you will need to install Flink locally.

Install Flink Locally

Inspect the install_flink.sh file, which downloads and extracts a suitable version of Flink. You can execute the script as is or run the individual commands yourself.

Note: Later instructions in the course assume that you have a Flink installation at learn-building-flink-applications-in-java/flink-<flink-version>. If you install Flink in a different location, you may need to adapt the instructions.

Navigate to the Flink bin folder.

cd flink-*/bin

Run your Flink cluster:

./start-cluster.sh

Then run three task managers by executing the following command three times:

./taskmanager.sh start

Ensure Flink is running by navigating to http://localhost:8081.
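Instead of refreshing the browser, you can ask Flink's REST API, which serves the web UI on port 8081, how many task managers have registered: GET /overview returns a JSON summary that includes a taskmanagers count. The sketch below assumes it is run from the Flink bin folder with curl installed; the function names are hypothetical. With Flink's default configuration, start-cluster.sh also launches one task manager of its own, so the sketch checks for at least three rather than exactly three.

```shell
#!/usr/bin/env bash
# Sketch: start a local Flink cluster plus three task managers, then poll
# Flink's REST API until at least three task managers have registered.
# Assumes it runs from the Flink bin folder (cd flink-*/bin) and curl is installed.

FLINK_URL="${FLINK_URL:-http://localhost:8081}"

# Print the "taskmanagers" count from the JSON returned by GET /overview.
taskmanager_count() {
  tr -d '\n' | sed -n 's/.*"taskmanagers": *\([0-9][0-9]*\).*/\1/p'
}

start_local_flink() {
  ./start-cluster.sh || return 1
  local i count
  for i in 1 2 3; do
    ./taskmanager.sh start || return 1
  done
  # Registration takes a moment, so poll once per second for up to 30 seconds.
  for i in $(seq 1 30); do
    count=$(curl -fsS "$FLINK_URL/overview" 2>/dev/null | taskmanager_count)
    if [ "${count:-0}" -ge 3 ]; then
      echo "Flink is up with $count task managers: $FLINK_URL"
      return 0
    fi
    sleep 1
  done
  echo "fewer than 3 task managers registered at $FLINK_URL" >&2
  return 1
}
```

Source the script from the bin folder and run start_local_flink; if it times out, the Flink UI at http://localhost:8081 and the files in the log folder are the places to look.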

Finish

This brings us to the end of this exercise.

Use the promo codes FLINKJAVA101 & CONFLUENTDEV1 to get $25 of free Confluent Cloud usage and skip credit card entry.
